Abstract

Solving complex Partial Differential Equations (PDEs) accurately and efficiently is an essential and challenging problem in all scientific and engineering disciplines. Mesh movement methods provide the capability to improve the accuracy of the numerical solution without increasing the overall mesh degree-of-freedom count. Conventional sophisticated mesh movement methods are extremely expensive and struggle to handle scenarios with complex boundary geometries. Learning-based methods offer an alternative; however, existing ones require re-training from scratch given a different PDE type or boundary geometry, which limits their applicability, and they also often suffer from robustness issues in the form of inverted elements (cells whose orientation flips, giving a non-positive area or volume).
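
The idea of gaining accuracy at a fixed degree-of-freedom count can be illustrated in one dimension. The sketch below is neither UM2N nor the paper's Monge-Ampère solver; it uses classical 1D equidistribution, assuming an arc-length-type monitor m(x) = sqrt(1 + alpha u'(x)^2). The function names `equidistribute_1d` and `has_inverted_elements`, and the parameters `alpha` and `n_fine`, are hypothetical choices for illustration. In 1D, an inverted element is simply a cell whose length is not positive.

```python
import numpy as np

def equidistribute_1d(u, n_points, alpha=10.0, n_fine=2001):
    """Move a 1D mesh on [0, 1] so that every cell holds an equal share of the
    integral of the (assumed) monitor m(x) = sqrt(1 + alpha * u'(x)**2).
    The node count n_points stays fixed; only node positions change."""
    xf = np.linspace(0.0, 1.0, n_fine)       # fine reference grid
    du = np.gradient(u(xf), xf)              # finite-difference estimate of u'(x)
    m = np.sqrt(1.0 + alpha * du**2)         # monitor function, always >= 1
    # Cumulative integral of m (trapezoidal rule), normalised to end at 1
    cum = np.concatenate(([0.0], np.cumsum(0.5 * (m[1:] + m[:-1]) * np.diff(xf))))
    cum /= cum[-1]
    # Invert the monotone map: node i sits where cum(x) = i / (n_points - 1)
    targets = np.linspace(0.0, 1.0, n_points)
    return np.interp(targets, cum, xf)

def has_inverted_elements(x):
    """A 1D cell is inverted (tangled) if its length is not strictly positive."""
    return bool(np.any(np.diff(x) <= 0.0))
```

For a steep front such as u(x) = tanh(50(x - 0.5)), the 21 nodes cluster around x = 0.5 while the endpoints stay fixed, so resolution is gained where the solution varies rapidly without adding nodes. Because the monitor is strictly positive, the cumulative map is strictly increasing and this 1D construction cannot produce inverted cells; in 2D and 3D no such simple guarantee exists.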

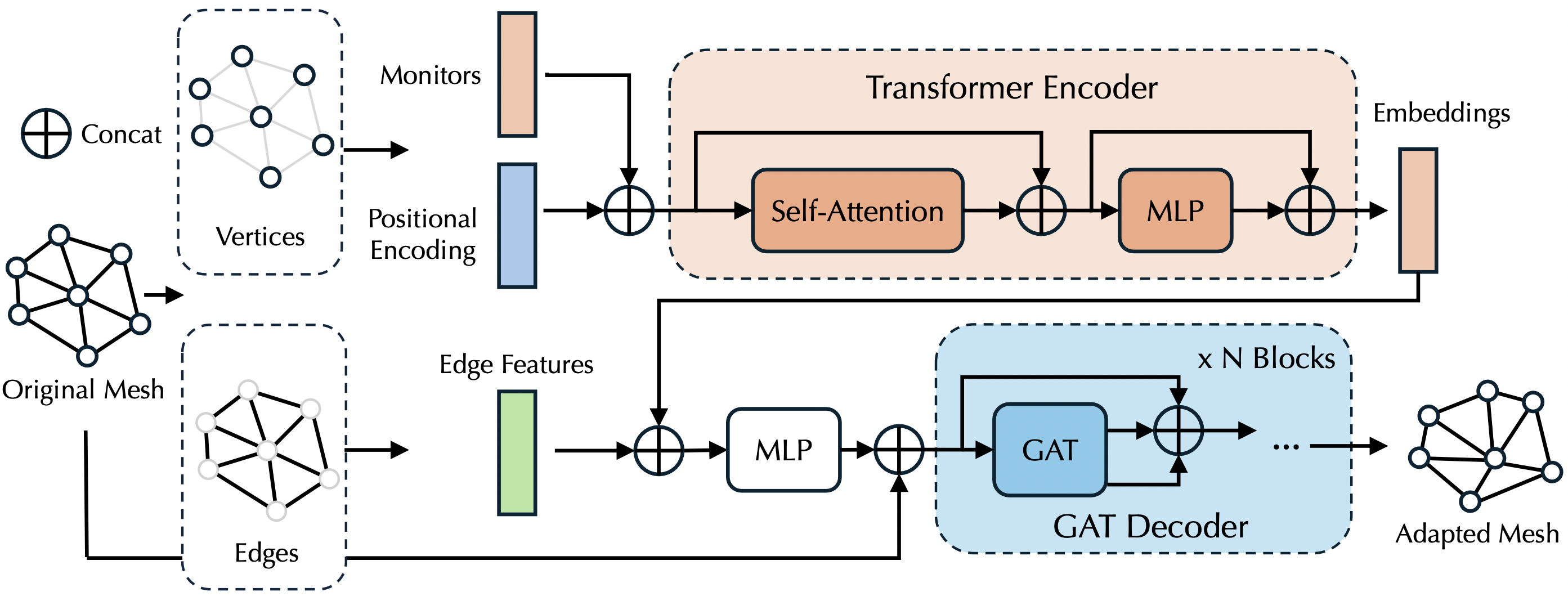

In this paper, we introduce the Universal Mesh Movement Network (UM2N), which, once trained, can be applied in a non-intrusive, zero-shot manner to move meshes with different size distributions and structures, for solvers applicable to different PDE types and boundary geometries. UM2N consists of a Graph Transformer (GT) encoder for extracting features and a Graph Attention Network (GAT) based decoder for moving the mesh. We evaluate our method on advection and Navier-Stokes based examples, as well as a real-world tsunami simulation case. On these benchmarks, our method outperforms existing learning-based mesh movement methods. In comparison to the sophisticated conventional method based on solving the Monge-Ampère PDE, our approach not only significantly accelerates mesh movement but also remains effective in scenarios where the conventional method fails.
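
For context, one standard way to pose Monge-Ampère-based mesh movement (as used in optimal-transport r-adaptivity) is shown below. This is an assumed textbook form for orientation, not necessarily the exact formulation used as the baseline in the paper. Here $\boldsymbol{\xi}$ are reference-mesh coordinates, $\mathbf{x}$ the moved coordinates, $\phi$ a scalar potential, $H(\phi)$ its Hessian with respect to $\boldsymbol{\xi}$, and $m$ the monitor function.

$$
\mathbf{x}(\boldsymbol{\xi}) = \boldsymbol{\xi} + \nabla_{\boldsymbol{\xi}}\phi(\boldsymbol{\xi}),
\qquad
m\big(\mathbf{x}(\boldsymbol{\xi})\big)\,\det\!\big(I + H(\phi)\big) = \theta,
\qquad
\theta = \frac{\int_{\Omega} m(\mathbf{x})\,\mathrm{d}\mathbf{x}}{\int_{\Omega}\mathrm{d}\boldsymbol{\xi}}.
$$

Since $\det(I + H(\phi))$ is the Jacobian determinant of the map $\boldsymbol{\xi} \mapsto \mathbf{x}$, the middle equation asks that cells shrink where $m$ is large, with $\theta$ fixed by integrating both sides over the domain. In 1D it reduces to the classical equidistribution condition $m(x)\,\mathrm{d}x/\mathrm{d}\xi = \theta$. Solving this fully nonlinear PDE at every adaptation step is one reason the conventional approach is expensive.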

Framework: The proposed UM2N framework. Given an input mesh, vertex features and edge features are collected separately. The coordinates and monitor function values are gathered from the vertices and fed into a graph transformer encoder to extract embeddings. The embedding vector produced by the encoder is then concatenated with the extracted edge features to form the input to the Graph Attention Network (GAT) decoder. The decoder processes this combined input together with a mesh query to produce the adapted mesh.
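
The data flow in the caption can be traced with a small NumPy sketch. This is not the authors' implementation: the single self-attention layer standing in for the graph transformer, the hypothetical edge features (edge vector and length), the way embeddings and edge features are concatenated to score edges, and the reading of the "mesh query" as the original vertex coordinates plus a predicted displacement are all assumptions. Weights are random and untrained, so the output only demonstrates shapes and data flow, not a meaningful adapted mesh.

```python
import numpy as np

rng = np.random.default_rng(0)

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def transformer_encoder(v, d=16):
    """Single-head self-attention over all vertices (stand-in for the GT encoder)."""
    Wq, Wk, Wv = (rng.normal(scale=0.3, size=(v.shape[1], d)) for _ in range(3))
    q, k, val = v @ Wq, v @ Wk, v @ Wv
    attn = softmax(q @ k.T / np.sqrt(d))       # (N, N) attention weights
    return attn @ val                          # (N, d) vertex embeddings

def mesh_edges(triangles):
    """Directed edge list (both directions) from triangle connectivity."""
    und = {tuple(sorted((t[i], t[(i + 1) % 3]))) for t in triangles for i in range(3)}
    und = np.array(sorted(und))
    return np.concatenate([und, und[:, ::-1]], axis=0)

def gat_decoder(h, edges, e_feat, query):
    """GAT-style decoder: each vertex attends over its mesh neighbours, scoring
    each edge from [h_dst, h_src, e_ij], then predicts a displacement."""
    n, d = h.shape
    a = rng.normal(scale=0.3, size=2 * d + e_feat.shape[1])  # attention vector
    W_out = rng.normal(scale=0.01, size=(d, 2))             # embedding -> (dx, dy)
    src, dst = edges[:, 0], edges[:, 1]
    scores = np.concatenate([h[dst], h[src], e_feat], axis=1) @ a
    scores = np.where(scores > 0, scores, 0.2 * scores)     # LeakyReLU, as in GAT
    out = np.zeros_like(h)
    for i in range(n):                                      # softmax per target vertex
        mask = dst == i
        if mask.any():
            out[i] = softmax(scores[mask]) @ h[src[mask]]
    return query + out @ W_out                              # moved coordinates

def um2n_like_forward(coords, monitor, triangles):
    """Hypothetical end-to-end pass: vertex features -> encoder -> decoder."""
    v = np.column_stack([coords, monitor])      # vertex features (x, y, m)
    h = transformer_encoder(v)
    edges = mesh_edges(triangles)
    dvec = coords[edges[:, 0]] - coords[edges[:, 1]]
    e_feat = np.column_stack([dvec, np.linalg.norm(dvec, axis=1)])
    return gat_decoder(h, edges, e_feat, query=coords)
```

Predicting a displacement relative to the query, rather than absolute coordinates, is a design choice made here so that leaving the mesh unchanged is easy to represent; a trained model would also need to keep boundary vertices on the boundary, which this sketch does not enforce.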

BibTeX

@article{zhang2024universalmeshmovement,
  author = {Mingrui Zhang and Chunyang Wang and Stephan Kramer and Joseph G. Wallwork and Siyi Li and Jiancheng Liu and Xiang Chen and Matthew D. Piggott},
  title = {Towards Universal Mesh Movement Networks},
  journal = {Advances in Neural Information Processing Systems},
  volume = {37},
  year = {2024},
  url = {https://arxiv.org/abs/2407.00382},
}